After nearly a decade in medical education, I’ve seen AI quietly reshape both learning and clinical practice. But as educators, clinicians, and patients, are we asking the right questions about its use?

Too often, AI tools are implemented without transparency, oversight, or patient consent. Some platforms even guide users on capturing patient data without clear governance in place, raising serious concerns about privacy, ownership, and compliance.

💡 Questions we all need to ask:

🔹 Patients: Is AI being used in my care? How is my data stored and used? Can I access my AI-generated records? Can I opt out?

🔹 Educators & Institutions: Are AI tools aligned with privacy laws? Have vendor agreements been updated to reflect AI-driven features?

🔹 Developers & Policymakers: Is AI implementation transparent and ethical? Are there built-in safeguards? Does the tool comply with FIPPA/HIPAA?

AI itself isn’t good or bad; it’s a tool. But without scrutiny, it can become a liability rather than an asset.

🔍 Never assume AI is being used ethically. Ask the hard questions. Demand clear answers. Protect patient trust.

~ Jacqueline

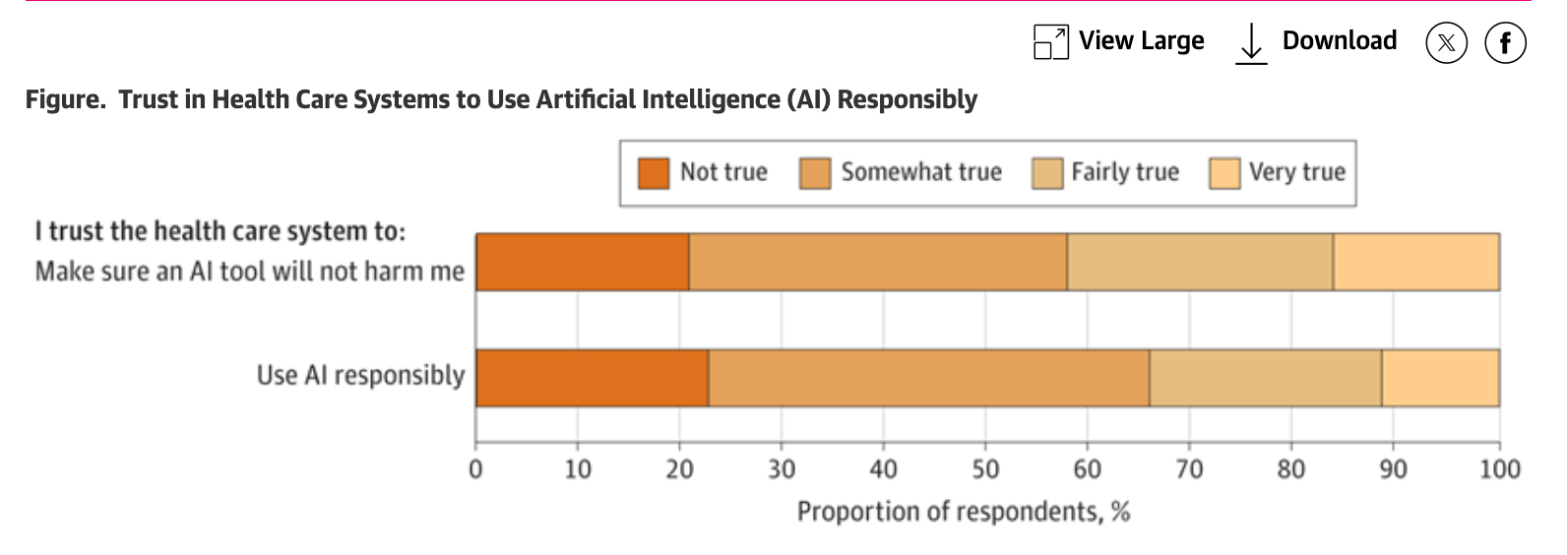

Learn more: “Patients’ Trust in Health Systems to Use Artificial Intelligence” (via JAMA)