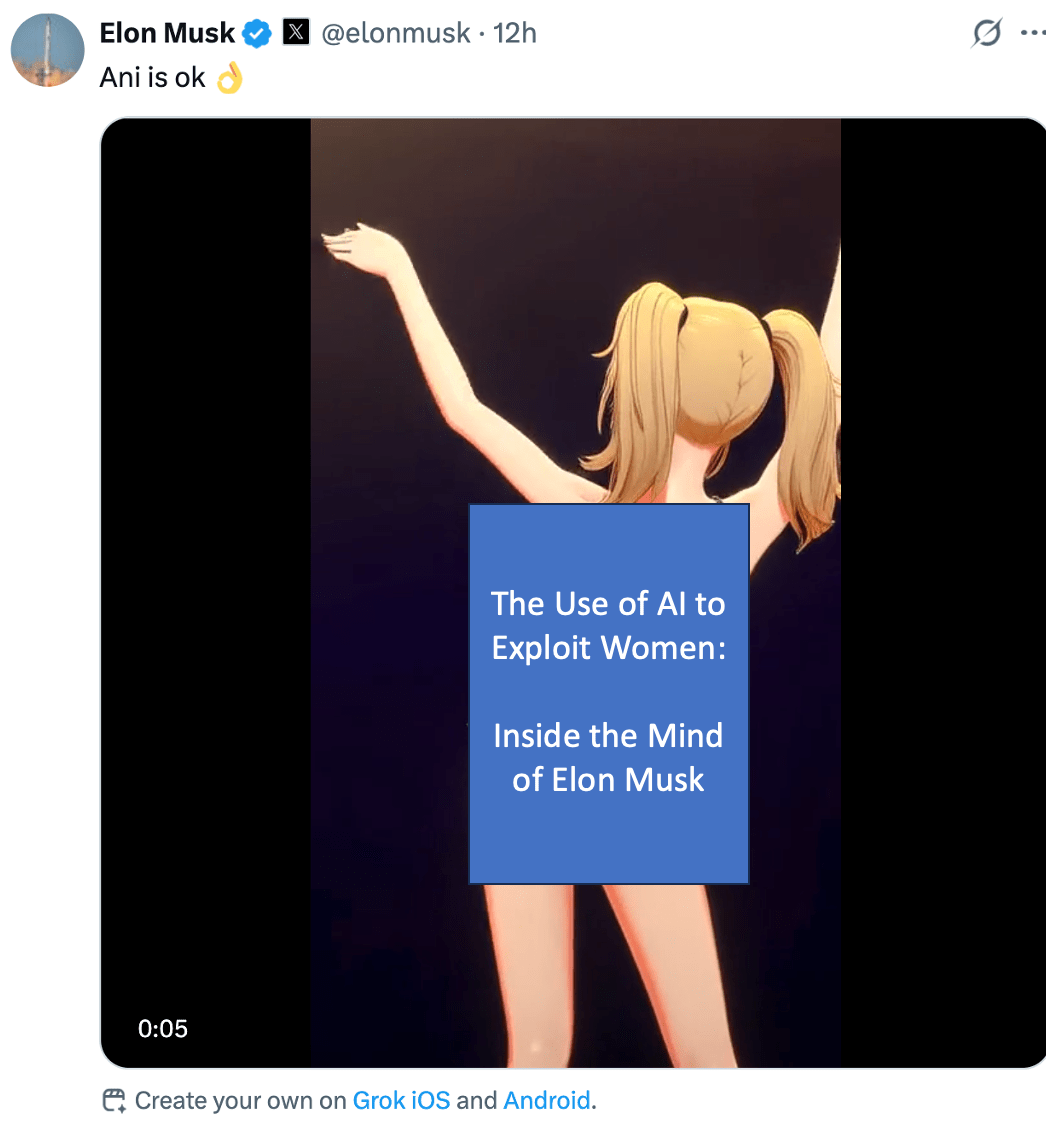

The Use of AI to Exploit Women: Inside the Mind of Elon Musk

“Evie, 21, was on her lunch break at her day job last month when she got a text from a friend, alerting her to the latest explicit content that was circulating online without her consent. ‘It felt humiliating.’”

Since Elon Musk’s AI tool Grok, which is built into X, introduced a “spicy” mode, I’ve observed a dramatic increase in sexually explicit deepfake images of women being shared on his platform for a variety of purposes. Whether it’s a doctored image of Taylor Swift or of someone else’s daughter, the fact that Musk’s AI platform encourages the generation of non-consensual deepfakes, branding it as “spicy” or “creative,” is both deeply disturbing and revealing about the man. It shows us exactly where Musk’s head is at: a Jeffrey Epstein–like mindset in which women’s dignity is disposable and profit is the name of the game.

As a woman, I’ve been warned by society that some people will use AI as an exploitative weapon: to harm, to harass, and to make money at the expense of other people’s identities and mental health. What I didn’t expect was that Elon Musk, who loudly calls for deporting sexual predators and releasing the Epstein files, would be the one leading the charge.

As AI platforms expand into sexually explicit content, policy must keep pace: consent, transparency, and accountability should be non-negotiable.

So the next question is: where do Canada’s policies stand? Right now, it’s all talk and no action. This inaction speaks volumes about the role our politicians play in the exploitation of their constituents and their children.

1. Bill C‑63 (Online Harms Act): Introduced in 2024; died on the Order Paper when Parliament was prorogued in January 2025.

2. Bill S‑209 (Protecting Young Persons from Exposure to Pornography Act): Proposed, age-verification focus.

3. Artificial Intelligence and Data Act (AIDA), part of Bill C‑27: Proposed; died on the Order Paper when Parliament was prorogued in January 2025.

More on the topic:

Sex is getting scrubbed from the internet, but a billionaire can sell you AI nudes: https://www.theverge.com/internet-censorship/756831/grok-spicy-videos-nonconsensual-deepfakes-online-safety

Their selfies are being turned into sexually explicit content with AI. They want the world to know: https://www.usatoday.com/story/life/health-wellness/2025/07/22/grok-ai-deepfake-images-women/85307237007/
