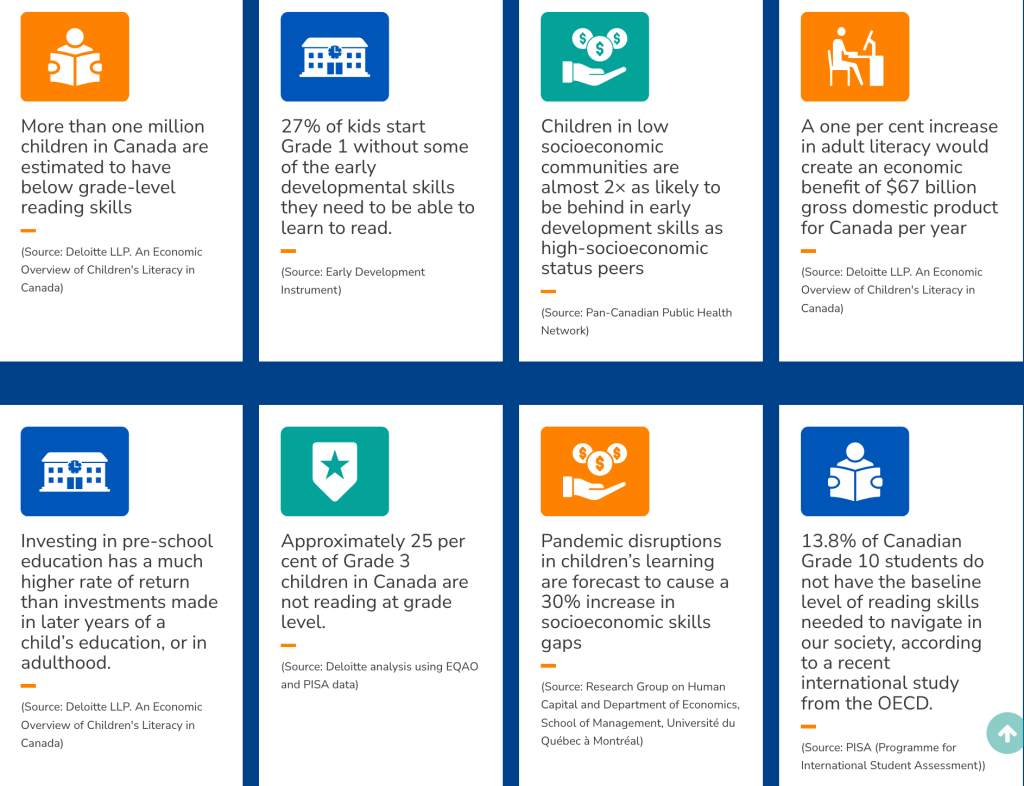

OBJECTIVE

Concussions can occur at any level of ice hockey. Estimates of their incidence vary, and optimal prevention strategies and return-to-play (RTP) considerations continue to evolve. The authors performed a mixed-methods study to characterize the landscape of concussion in ice hockey and to catalyze initiatives that standardize preventive measures and RTP considerations.

METHODS

The authors performed a five-part mixed-methods study comprising: 1) an analysis of the impact of concussions on games missed and income for National Hockey League (NHL) players, using a publicly available database; 2) a systematic review of the incidence of concussion in ice hockey; 3) a systematic review of preventive strategies; 4) a systematic review of RTP; and 5) a review of concussion-related policy documents from major sports governing bodies, with a focus on ice hockey. The systematic reviews searched the PubMed, Embase, and Scopus databases and included studies of hockey at any level.

RESULTS

In the NHL, 689 players sustained 1054 concussions from the 2000–2001 through the 2022–2023 seasons. A concussion led to a mean of 13.77 ± 19.23 (range 1–82) games missed in the same season. From 2008–2009, when cap hit per game data became available, players missed 10,024 games due to 668 concussions (mean 15.13 ± 3.81 games per concussion, range 8.81–22.60), with a mean cap hit per game missed of $35,880.85 ± $25,010.48 (range $5792.68–$134,146.30). The total cap hit of all missed games was $385,860,790.00, equating to $577,635.91 per concussion and $25,724,052.70 per NHL season. On systematic review, the incidence of concussion was 0.54–1.18 per 1000 athlete-exposures. Prevention approaches included education, behavioral and cognitive interventions, protective equipment, biomechanical studies, and policy and rule changes; rules prohibiting body checking among youth players were the most effective. Criteria for determining RTP varied. The concussion protocols of both North American governing bodies and of two leagues mandated that any player suspected of having a concussion be removed from play and complete a six-step RTP strategy. The 6th International Conference on Concussion in Sport recommended that children and adolescents wear mouthguards and that body checking be disallowed for all children and at most levels of adolescent play.
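The cost figures in the results follow from simple averages. As a minimal sketch, the Python below multiplies the reported per-concussion and per-season cap hits back out to check that they agree, and shows how an incidence per 1000 athlete-exposures is computed. Two assumptions are not stated in the abstract: that cap hit per game is the annual cap hit divided by an 82-game regular season, and that the 2008–2009 to 2022–2023 window counts as 15 seasons. All function names and the incidence counts in the example are hypothetical and for illustration only.

```python
# Sketch: arithmetic behind the NHL cap-hit figures and the incidence metric.
# ASSUMPTIONS (not stated in the abstract): cap hit per game = annual cap hit / 82
# regular-season games; the cap-hit window 2008-2009 to 2022-2023 spans 15 seasons.

REGULAR_SEASON_GAMES = 82  # assumed denominator for the per-game cap hit


def cap_hit_per_game(annual_cap_hit: float) -> float:
    """Hypothetical helper: cap hit per game, assuming an 82-game season."""
    return annual_cap_hit / REGULAR_SEASON_GAMES


def incidence_per_1000(concussions: int, athlete_exposures: int) -> float:
    """Hypothetical helper: concussions per 1000 athlete-exposures
    (one athlete taking part in one game or practice = one exposure)."""
    return concussions / athlete_exposures * 1000


def seasons_between(first_start_year: int, last_start_year: int) -> int:
    """Count seasons inclusively by starting year, e.g. 2008 (2008-2009) to 2022 (2022-2023)."""
    return last_start_year - first_start_year + 1


# Figures reported in the abstract.
concussions_with_cap_data = 668
per_concussion = 577_635.91
per_season = 25_724_052.70
n_seasons = seasons_between(2008, 2022)

# Multiply each reported average back out to recover the implied total cap hit.
total_from_concussions = per_concussion * concussions_with_cap_data
total_from_seasons = per_season * n_seasons

print(f"Seasons in cap-hit window: {n_seasons}")
print(f"Total implied by per-concussion mean: ${total_from_concussions:,.2f}")
print(f"Total implied by per-season mean:     ${total_from_seasons:,.2f}")

# Under the 82-game assumption, a $475,000 annual cap hit gives about $5,792.68
# per game, the low end of the reported per-game range.
print(f"Per-game cap hit for $475,000: ${cap_hit_per_game(475_000):,.2f}")

# Hypothetical counts: 3 concussions over 5000 athlete-exposures.
print(f"Incidence: {incidence_per_1000(3, 5000):.2f} per 1000 athlete-exposures")
```

Multiplying either reported average back out gives a total of roughly $385.86 million, so the per-concussion and per-season figures are consistent with each other.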

CONCLUSIONS

Concussions in ice hockey lead to substantial time missed from play. The authors strongly encourage all hockey leagues to adopt and adhere to age-appropriate rules that limit hits to the head, to increase compliance with protective equipment use, and to use high-quality concussion protocols.

Learn more in the full study, "Concussions in ice hockey: mixed methods study including assessment of concussions on games missed and cap hit among National Hockey League players, systematic review, and concussion protocol analysis," published in the Journal of Neurosurgery.

Pictured: JT Miller and Filip Hronek. Wear your helmet during warm-up, Hronek… 🙂