🎉 Excited to be facilitating two sessions at the 2025 CHES Celebration of Scholarship hosted by the UBC Centre for Health Education Scholarship.

🗓 October 22, 2025

📍 Robert H. Lee Alumni Centre, UBC

🔹 Round Table Discussion (8:30–9:15am)

“DocBot 101: Making Sense of AI Before It Makes Sense of You”

Co-facilitated with Dr. Meera Anand, this interactive session invites educators and researchers to explore how we can prepare learners to critically engage with AI before it defines the terms for them.

🔹 Oral Presentation (2:15–3:15pm)

“Swipe Right on Clinical Reasoning: Med Students Date the Future (It’s AI)”

I’ll be sharing insights on how generative AI is reshaping clinical reasoning and what this evolving relationship means for medical students and educators.

Grateful to CHES and UBC CPD for supporting meaningful dialogue in health professions education. Looking forward to connecting with colleagues who are navigating and shaping this rapidly changing field.

Liability

AI is entering the exam room—who’s liable if it gives the wrong prescription?

In this must-read piece, Solaiman & Malik (2024) dissect the evolving EU legal landscape for algorithmic care, where the Artificial Intelligence Act meets real-world clinical complexity. Doctors are no longer the only ones with decision-making power: AI systems are being trained and deployed, and (occasionally) they hallucinate diagnoses with remarkable confidence. 🙂

But when the AI makes a mistake… who takes the fall? The doctor? The developer? The data itself?

This paper explores how regulatory frameworks are shifting the traditional doctor–patient model, nudging us into a new triangle: doctor–patient–algorithm. Spoiler alert: Only one of them has a CE marking.

🌍 The EU’s AI Act is more than just red tape—it’s an attempt to ensure transparency, accountability, and safety in algorithmic care. And if you’re in healthcare or med ed, this isn’t just legalese—it’s your future.

✨ Favourite quote?

“AI’s growing sophistication presents unique challenges that threaten to erode the autonomy gained by disempowering patients and doctors alike and shifting controls to external market forces. Although AI’s potential to enhance diagnostic accuracy and support informed decision-making seems promising, it risks over-reliance by doctors, diminished personal interaction with patients, and raises concerns about data privacy, opacity, and accountability.”

🩺 Doctors may need to add “algorithm whisperer” to their CVs.

Read the full article here: https://academic.oup.com/medlaw/article/33/1/fwae033/7754853?login=false

Rise in HIV

“HIV does not respect borders. We have seen an increase in the number of HIV cases in British Columbia, and more than two-thirds of those cases are cases that come into the province with HIV from other jurisdictions.”

– Dr. Julio Montaner, executive director of the BC Centre for Excellence in HIV/AIDS

Two troubling trends are converging in the rise in HIV cases here in BC and abroad.

In one corner: Rising HIV Incidence in B.C. & Canada at Large

At the BC Centre for Excellence’s national HIV summit (June 6, 2025), experts sounded the alarm on a 35% jump in HIV cases in Canada from 2022 to 2023, with rates continuing upward in 2024 and 2025. They emphasized that cuts in global HIV funding, particularly U.S. support through PEPFAR and USAID, are threatening domestic progress and jeopardizing Treatment as Prevention (TasP®) and PrEP strategies.

In the other corner: U.S. Aid Destruction: Millions in HIV Drugs & Contraceptives Left to Waste

Reports indicate USAID stockpiled roughly $12 million worth of HIV-prevention drugs and contraceptives destined for developing countries, but under a recent executive order they have been stranded in U.S. warehouses since January 2025. With expiration dates looming, these vital supplies risk being sold off or destroyed. Former USAID leadership is urging the administration to release or donate the supplies rather than destroy them, warning that bureaucratic paralysis now threatens hundreds of thousands of lives.

Why These Trends Connect — and Why You Should Care

1. Lost U.S. donations mean fewer drugs reaching communities in need internationally, and a lost opportunity to repurpose that stock locally or support Canada.

2. Rising HIV in Canada is a wake-up call: even with progressive domestic policies, we’re vulnerable—especially when global systems falter.

3. Global solidarity matters: U.S. aid cuts ripple globally; local healthcare programs rely on international collaboration to fill gaps.

This is a time when we need to work together.

Read more on “B.C. experts sound the alarm over rising number of HIV cases”: https://vancouversun.com/news/bc-experts-alarm-rising-hiv

Read more on “Trump administration to destroy vital HIV meds and contraceptives worth $12 million following closure of USAID”: https://economictimes.indiatimes.com/news/international/us/trump-admin-set-to-destroy-vital-hiv-meds-and-contraceptives-worth-12-million-following-closure-of-usaid/articleshow/121786727.cms?from=mdr

Mapping

“It is interesting to note that there is so much evidence regarding the intervention of these digital systems in medical education that it is practically impossible for the human mind to summarize. It is stated that to ensure the success of AI in medical education, it is crucial to foster interdisciplinary collaboration and increase investment in education and training.”

Just published: A scoping review mapping the integration of AI in undergraduate medical education (April 2025)

A new review (34 studies analyzed) highlights the global patchiness in how AI is brought into med school curricula. While AI tools like intelligent tutoring systems, chatbots, and VR simulations are gaining ground, there’s no unified framework yet. Key takeaways:

1. AI is best introduced as a supportive tool—enhancing ethics, digital competency, and collaboration—not merely a standalone subject.

2. Teaching must go beyond technical know-how: it needs to embrace ethical reasoning, patient-centered care, and systems thinking. (See Deborah Lupton’s book on Digital Health for more in this area.)

3. Institutional hurdles persist: from lack of faculty training to resource gaps.

4. There’s a critical need for adaptable, globally informed curricula.

We need standardized yet flexible frameworks to train tomorrow’s physicians to use AI responsibly and effectively. Let’s foster interdisciplinary collaboration (ethics + data science + clinical practice) and expand institutional support for curricular reform.

Read more on “Mapping the use of artificial intelligence in medical education: a scoping review”: https://bmcmededuc.biomedcentral.com/articles/10.1186/s12909-025-07089-8

WTF Lab?

I didn’t mean to start a company—I just had a school assignment.

As part of my Oxford program, I was asked to co-design a digital health innovation. But I didn’t want to write about a hypothetical idea—I wanted to build something real.

So I created WTF Lab? It’s a health data translator for people who’ve ever stared at their lab results or medication list and thought:

“What the f— does this mean?”

WTF Lab? helps patients:

+ Upload and track labs, medications, and symptoms

+ Visualize progress

+ Understand what’s actually happening in their bodies

It’s not built yet—but it’s built to matter.

I’m looking for collaborators (developers, designers, digital health thinkers, and patients) who want to make health data more human, less overwhelming, and a lot clearer.

WTF Lab? started as a school project.

Now I want to see it through.

wtflab.wtf

#buildinpublic #digitalhealth #oxford #healthtech #wtflab #healthliteracy

LOGO

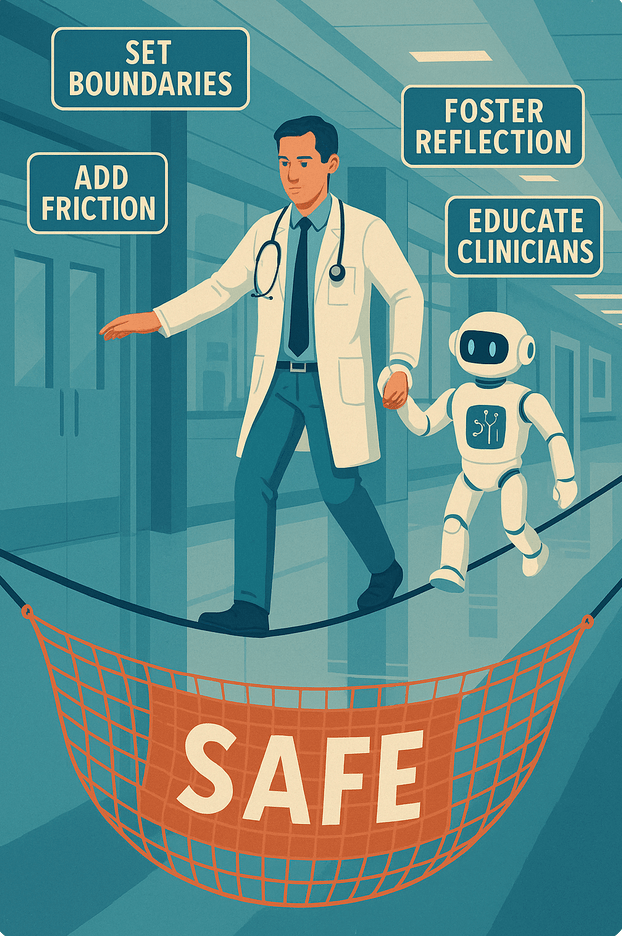

SAFE AI

We’re entering a new era in healthcare and medical education—one where AI is increasingly integrated into tasks such as clinical reasoning and decision support. To support clinicians and educators in using AI responsibly, we developed the SAFE approach:

S: Set Boundaries: Define what AI can and cannot do in your clinical context.

1. No final diagnoses, prescriptions, or critical decisions.

2. Use for drafts, brainstorming, summaries, or language simplification.

3. Establish clinical red lines where human judgment is non-negotiable.

A: Add Friction: Prevent blind reliance with built-in checks.

1. Label all AI-generated text: “Machine-generated. Verify before use.”

2. Require human review before integrating into EMRs.

3. Add pop-up reminders or checklists before clinical use.

F: Foster Reflection: Create space for metacognition.

1. Debrief as a team: “Where did AI help us? Where did it overreach?”

2. Reflect not just on workflow, but on thinking habits.

3. Make critical thinking part of every AI-assisted task.

E: Educate Clinicians: Build AI literacy as a core clinical skill.

1. Explain how language models work—and where they fail.

2. Share real stories of hallucinations, bias, and misfires.

3. Use vignettes to spark ethical reflection and dialogue.

As this technology evolves, it’s crucial to recognize that AI is less about being “artificial” or “intelligent,” and more about automation and translation. It does not replace human judgment—it requires it.

Timing

When Should Learners Meet AI? And perhaps more importantly—how?

In this powerful piece, “We, Robots” via Persuasion, writer Kate Epstein makes a compelling case: AI isn’t just a tool—it’s a value system. And if we hand over too much too soon, we risk outsourcing not only decisions, but the very process of learning, judgment, and ethical reasoning.

As someone working in medical education, this hits close to home.

👩‍⚕️ Do we teach students to work with AI, or to defer to it?

⚖️ When do they learn to question, interpret, or even override algorithmic outputs?

🚩 And what happens when AI becomes not just assistive tech but an invisible authority?

A critical read for educators, clinicians, and anyone thinking about the future of knowledge, power, and responsibility in the age of intelligent machines.

Read more here: https://www.persuasion.community/p/we-robots

Zebra

Scientists want to understand how animals behave together in groups, like schools of fish. This study used virtual reality and robot fish to figure out how young zebrafish respond to others when swimming in groups. The researchers created a virtual world where real fish could interact with robot fish, allowing them to closely study how fish make movement decisions based on what they see.

They discovered that fish tend to follow one or more targets using a simple rule based on the fish’s own point of view. This behavior worked well even when the fish didn’t have all the information—showing how reliable it is. The team called the rule “BioPD,” a basic type of control system.

Because this kind of following behavior is important in designing self-driving vehicles (like drones or underwater robots), they tested BioPD in these systems. It worked really well, needing very little customization, which shows that nature’s simple strategies can help improve technology too.

Source: “Reverse engineering the control law for schooling in zebrafish using virtual reality” via Science Robotics.

Revolutionary to Receivership

From Revolutionary to Receivership: When Cannabis Promises Go Up in Smoke

You believe in cannabis reform — health, justice, access.

You don’t believe in empty slogans.

Enter Revolutionary Clinics, co-founded by Greg Ryan Ansin.

Trauma-informed care, community wellness, healing centers — it all sounded good.

Until Fitchburg residents started filing odor complaints.

Until regulators started asking questions.

Until the apologies started piling up.

“I feel like I’ve been on an eight-month apology tour…”

Eight months of apologies?

That’s not innovation. That’s mismanagement.

What Actually Happened:

- $120,000 fine for illegal vape sales

- Four months’ probation

- Blamed the lab tech (he was fired)

- Closed their Cambridge location after a landlord lawsuit. Revolutionary Clinics, which has a cultivation and production facility in Fitchburg and four dispensaries in Massachusetts including Leominster, is accused of owing $279,570 in unpaid rent at its dispensary in Cambridge’s Central Square.

- Owes nearly $10 million and is now in receivership. Read the court filing here.

Meanwhile, in Fitchburg, the smell and the silence linger.

This isn’t about weed.

It’s about wellness-washing — branding over safety, slogans over standards. If this were any other healthcare setting, we wouldn’t call it a “rough patch.”

We’d call it a leadership failure.

Progress means:

- Showing up.

- Owning mistakes.

- Protecting the public — not just the bottom line.

When a cannabis exec calls it an apology tour, it’s time to stop clapping and start asking hard questions.

Because real revolutions smell like change — not ethanol.

References:

Following Landlord Lawsuit, cannabis company with Fitchburg Cultivation Closes Cambridge

Revolutionary Labs Slapped with a $120,000 Fine and Probation

Revolutionary Clinics is $10,000,000 in debt.

Fitchburg cannabis firm fights odor issues as it seeks approval for renovation